Paper accepted at Nature Scientific Data Journal

We are very pleased to announce that our group got a paper accepted at the Scientific Data journal, an open-access publication from Nature Research dedicated to descriptions of scientifically valuable datasets.

Nature is a weekly international journal publishing the finest peer-reviewed research in all fields of science and technology on the basis of its originality, importance, interdisciplinary interest, timeliness, accessibility, elegance and surprising conclusions. Nature also provides rapid, authoritative, insightful and arresting news and interpretation of topical and coming trends affecting science, scientists and the wider public. Scientific Data is a peer-reviewed, open-access journal for descriptions of scientifically valuable datasets, and research that advances the sharing and reuse of scientific data. It covers a broad range of research disciplines, including descriptions of big or small datasets, from major consortiums to single research groups. Scientific Data primarily publishes Data Descriptors, a new type of publication that focuses on helping others reuse data, and crediting those who share.

Here is the accepted paper with its abstract:

- “A linked open data representation of patents registered in the US from 2005-2017” by Mofeed Hassan, Amrapali Zaveri, Jens Lehmann

Abstract: Patents are widely used to protect intellectual property and as a measure of innovation output. Each year, the USPTO grants over 150,000 patents to individuals and companies all over the world. In fact, there were more than 280,000 patent grants issued in the US in 2015. However, accessing, searching and analyzing those patents is often still cumbersome and inefficient. To overcome those problems, Google indexes patents and converts them to Extensible Markup Language (XML) files using Optical Character Recognition (OCR) techniques. In this article, we take this idea one step further and provide semantically rich, machine-readable patents using the Linked Data principles. We have converted the data spanning 12 years (2005–2017) from XML to the Resource Description Framework (RDF) format, conforming to the Linked Data principles, and made it publicly available for re-use. This data can be integrated with other data sources in order to further simplify use cases such as trend analysis, structured patent search and exploration, and societal progress measurements. We describe the conversion, publishing and interlinking process, along with several use cases for the USPTO Linked Patent data.
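The abstract describes converting patent records from XML into RDF following the Linked Data principles. As a rough illustration of that kind of mapping (not the authors' pipeline), the sketch below turns a toy XML record into N-Triples using only Python's standard library. The element names (`doc-number`, `title`, `date`) and the `example.org` IRIs are hypothetical placeholders, not the USPTO schema or the vocabulary used in the paper.

```python
import xml.etree.ElementTree as ET

# Hypothetical, heavily simplified patent record; real USPTO XML is far richer.
SAMPLE_XML = """<patent>
  <doc-number>1234567</doc-number>
  <title>Example widget</title>
  <date>2015-06-02</date>
</patent>"""

# Hypothetical IRI prefixes, chosen only for illustration.
BASE = "http://example.org/patent/"
PROP = "http://example.org/ontology/"


def escape_literal(text: str) -> str:
    """Escape backslashes, quotes and newlines for an N-Triples string literal."""
    return text.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def patent_to_ntriples(xml_text: str) -> list[str]:
    """Map one XML patent record to N-Triples lines, one per known field."""
    root = ET.fromstring(xml_text)
    number = root.findtext("doc-number").strip()
    subject = f"<{BASE}{number}>"  # the patent number becomes the subject IRI
    triples = []
    for tag in ("title", "date"):
        value = root.findtext(tag)
        if value is not None:  # skip fields missing from this record
            triples.append(f'{subject} <{PROP}{tag}> "{escape_literal(value.strip())}" .')
    return triples


for line in patent_to_ntriples(SAMPLE_XML):
    print(line)
```

Minting one IRI per patent number is what makes the records linkable: other datasets can refer to the same subject IRI.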

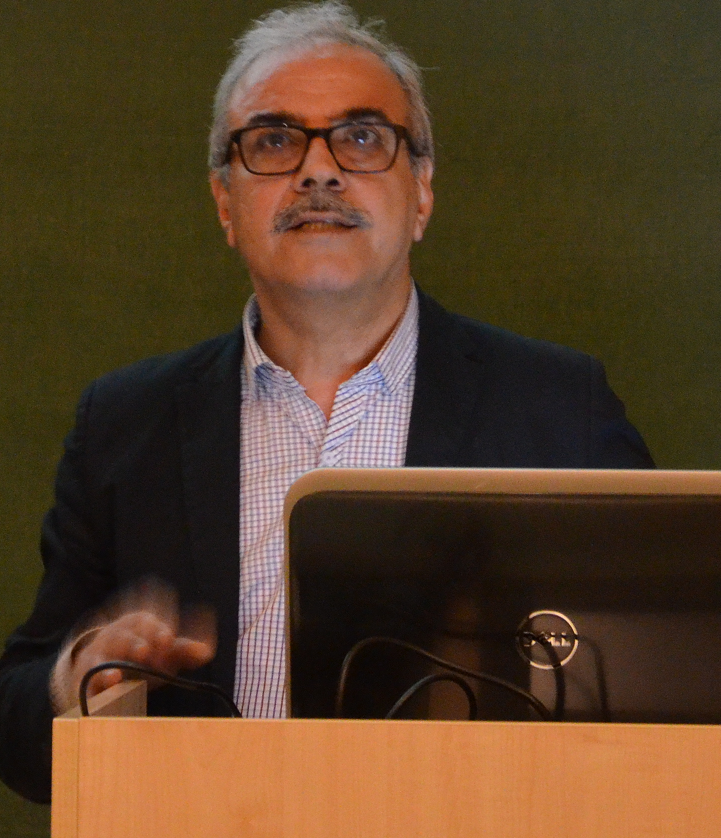

Prof. Dr. John Domingue visits SDA

Prof. John Domingue from The Open University (OU), Milton Keynes, UK visited the SDA group on November 7th, 2018.

John Domingue is a full Professor at the Open University and Director of the Knowledge Media Institute in Milton Keynes, focusing on research in the Semantic Web, Linked Data, Services, Blockchain, and Education. He also serves as the President of STI International, a semantics focused networking organization which runs the ESWC conference series.

His current work focuses on how a combination of blockchain and Linked Data technologies can be used to process personal data in a decentralized, trusted manner, and how this can be applied in the educational domain (see http://blockchain.open.ac.uk/). This work is funded by a number of projects. The Institute of Coding is a £20M UK initiative which aims to increase the graduate computing skills base in the UK. As leader of the first of the project's five themes, John Domingue is focusing on the use of blockchain micro-accreditation to support the seamless transition of learners between UK universities and UK industry. From January 2019, he will play a leading role in the EU-funded QualiChain project, which aims to revolutionize public education and its relationship to the labor market and policy-making by disrupting the way accredited educational titles and other qualifications are archived, managed, shared and verified, taking advantage of blockchain, semantics, data analytics and gamification technologies.

From January 2015 to January 2018, he served as the Project Coordinator for the European Data Science Academy, which aimed to address the skills gap in data science across Europe. The project was a success, leading to a number of outcomes including a combined data science skills and courses portal enabling learners to find jobs across Europe that match their qualifications.

Prof. Domingue was invited to give a talk “Towards the Decentralisation of Personal Data through Blockchains and Linked Data“ at the Computer Science Colloquium at the University of Bonn co-organized by SDA.

At the bi-weekly “SDA colloquium presentations”, he presented KMi and the main research topics of the institute. The goal of Prof. Domingue’s visit was to exchange experience and ideas on decentralized applications that combine blockchain technologies with Linked Data. In addition to presenting various use cases where blockchain and Linked Data technologies have helped communities gain useful insights, Prof. Dr. Domingue shared with our group future research problems and challenges in this area. During the meeting, SDA's core research topics and main research projects were presented, and we identified suitable topics for future collaboration with Prof. Domingue and his research group.

Paper accepted at JURIX 2018

We are very pleased to announce that our group got one paper accepted for presentation at the 31st International Conference on Legal Knowledge and Information Systems (JURIX 2018), which will be held on December 12–14, 2018 in Groningen, the Netherlands.

JURIX organizes yearly conferences on the topic of Legal Knowledge and Information Systems. The proceedings of the conferences are published in the Frontiers of Artificial Intelligence and Applications series of IOS Press.

The JURIX conference attracts a wide variety of participants from government, academia and business. It is accompanied by workshops on topics such as eGovernment, legal ontologies, legal XML, alternative dispute resolution (ADR), argumentation and deontic logic.

Here is the accepted paper with its abstract:

- “A Question Answering System on Regulatory Documents” by Diego Collarana, Timm Heuss, Jens Lehmann, Ioanna Lytra, Gaurav Maheshwari, Rostislav Nedelchev, Thorsten Schmidt, Priyansh Trivedi.

Abstract: In this work, we outline an approach for question answering over regulatory documents. In contrast to traditional means of accessing information in this domain, the proposed system attempts to deliver an accurate and precise answer to user queries. This is accomplished by a two-step approach which first selects relevant paragraphs given a question, and then compares the selected paragraph with the user query to predict a span in the paragraph as the answer. We employ neural network-based solutions for each step and compare them with existing and alternative baselines. We perform our evaluations with a gold-standard benchmark comprising over 600 questions on the MaRisk regulatory document. In our experiments, we observe that our proposed system outperforms the other baselines.

Acknowledgment

This research was partially supported by an EU H2020 grant provided for the WDAqua project (GA no. 642795).

Looking forward to seeing you at JURIX 2018.

Prof. Dr. Khalid Saeed visits SDA

Prof. Dr. Khalid Saeed from Bialystok University of Technology, Bialystok, Poland visited the SDA group on October 24, 2018.

Khalid Saeed is a full Professor of Computer Science in the Faculty of Computer Science at Bialystok University of Technology and Faculty of Mathematics and Information Science at Warsaw University of Technology, Poland. He was with AGH Krakow in 2008-2014.

Khalid Saeed received the BSc degree in Electrical and Electronics Engineering from Baghdad University in 1976, and the MSc and PhD degrees from the Wroclaw University of Technology in Poland in 1978 and 1981, respectively. He received his DSc degree (Habilitation) in Computer Science from the Polish Academy of Sciences in Warsaw in 2007, and was nominated by the President of Poland for the title of Professor in 2014. He has more than 200 publications, including 23 edited books and 8 textbooks and reference books. He has supervised more than 110 MSc and 12 PhD theses and received more than 20 academic awards. His areas of interest are Image Analysis and Processing, Biometrics and Computer Information Systems.

Prof. Jens Lehmann invited the speaker to the bi-weekly “SDA colloquium presentations”, which 20-30 researchers and students from SDA attended. The goal of his visit was to exchange experience and ideas on biometrics applications in daily life, including face recognition, fingerprints, privacy and more. Apart from presenting various use cases in which biometrics has helped scientists gain useful insights from image analysis and processing and from raw data, Prof. Dr. Saeed shared with our group future research problems and challenges in this area and gave a talk on “Biometrics in everyday life”.

As part of a national BMBF-funded project, Prof. Saeed (BUT) is currently cooperating with Fraunhofer IAIS in the field of cognitive engineering. As an outcome of this visit, we expect to strengthen our research collaboration networks with WUT and BUT, mainly on combining semantic knowledge with Ubiquitous Computing and its applications, Emotion Detection and Kansei Engineering.

At the same time, this talk continued the networking activities within the EU H2020 LAMBDA project (Learning, Applying, Multiplying Big Data Analytics). As part of this event, Dr. Valentina Janev from the Institute “Mihajlo Pupin” (PUPIN) attended the SDA meeting to explore further networking with potential partners from Poland. Among other points, co-organizing upcoming conferences and writing joint research papers were discussed.

SDA at ISWC 2018 and a Best Demo Award

The International Semantic Web Conference (ISWC) is the premier international forum where Semantic Web / Linked Data researchers, practitioners, and industry specialists come together to discuss, advance, and shape the future of semantic technologies on the web, within enterprises and in the context of public institutions.

We are very pleased to announce that we got 3 papers accepted at ISWC 2018 for presentation at the main conference. Additionally, we also had 5 Posters/Demo papers accepted.

Furthermore, we are very happy to announce that we won the Best Demo Award for the WebVOWL Editor: “WebVOWL Editor: Device-Independent Visual Ontology Modeling” by Vitalis Wiens, Steffen Lohmann and Sören Auer.

Congratulations to the authors of the best poster and demo! #iswc2018 #iswc_conf #award pic.twitter.com/N2CmdXWf9F

— iswc2018 (@iswc2018) October 12, 2018

Here are some further pointers in case you want to know more about WebVOWL Editor:

- Website: http://editor.visualdataweb.org/

- GitHub: https://github.com/VisualDataWeb/WebVOWL/tree/vowl_editor

- Demo: https://www.youtube.com/watch?v=XWXhpEr9LPY

Among the other presentations, our colleagues gave the following talks:

- “EARL: Joint Entity and Relation Linking for Question Answering over Knowledge Graphs” by Mohnish Dubey, Debayan Banerjee, Debanjan Chaudhuri and Jens Lehmann

Mohnish Dubey presented EARL, a system for joint entity and relation linking for question answering over DBpedia, evaluated on LC-QuAD, which uses Elasticsearch, fastText embeddings and an LSTM. The paper proposes two approaches: one based on a Generalized Travelling Salesman Problem (GTSP) solver, and one using a classifier over connection density (three features) for adaptive re-ranking.

@MohnishDubey is presenting “EARL: Joint Entity and Relation Linking for Question Answering over Knowledge Graphs” for the Research Track at #iswc2018 https://t.co/TCaWRGGqf9 pic.twitter.com/Csc8aOLdjZ

— SDA Research (@SDA_Research) October 10, 2018

GitHub: https://github.com/AskNowQA/EARL

Slides: https://www.slideshare.net/MohnishDubey/earl-joint-entity-and-relation-linking-for-question-answering-over-knowledge-graphs

Demo: https://earldemo.sda.tech/

- “DistLODStats: Distributed Computation of RDF Dataset Statistics” by Gezim Sejdiu, Ivan Ermilov, Jens Lehmann and Mohamed Nadjib Mami

Gezim Sejdiu presented DistLODStats, a novel software component for distributed in-memory computation of RDF dataset statistics, implemented using the Spark framework. The tool is maintained and has an active community thanks to its integration into the larger SANSA framework.

@Gezim_Sejdiu is presenting “DistLODStats: #Distributed Computation of #RDF #Dataset #Statistics” for the Resources Track at #iswc2018 https://t.co/yszIduYevG pic.twitter.com/wnBYHEIlx4

— SDA Research (@SDA_Research) October 11, 2018

GitHub: https://github.com/SANSA-Stack/SANSA-RDF

Slides: https://www.slideshare.net/GezimSejdiu/distlodstats-distributed-computation-of-rdf-dataset-statistics-iswc-2018-talk

- “Synthesizing Knowledge Graphs from web sources with the MINTE+ framework” by Diego Collarana, Mikhail Galkin, Christoph Lange, Simon Scerri, Sören Auer and Maria-Esther Vidal

Diego Collarana presented how MINTE+, an RDF molecule-based integration framework, synthesizes knowledge graphs from different web sources, illustrated in three domain-specific applications.

@collarad is presenting “Synthesizing #Knowledge #Graphs from #web sources with the MINTE+ framework” for the In-Use Track at #iswc2018 https://t.co/Dl3Jddmgeu pic.twitter.com/fMmapEcqeK

— SDA Research (@SDA_Research) October 10, 2018

GitHub: https://github.com/RDF-Molecules/MINTE

Slides: https://docs.google.com/presentation/d/1tV1tEuIMJoOhaTvlsgndi4YTk5ZoIuBfl9Bi3XbVr0c/edit?usp=sharing

Demo: https://youtu.be/6bNP21XSu6s

Workshops

- Visualization and Interaction for Ontologies and Linked Data (VOILA 2018)

Steffen Lohmann co-organized the International Workshop on Visualization and Interaction for Ontologies and Linked Data (VOILA 2018) for the third time at ISWC. Overall, more than 40 researchers and practitioners took part in this full-day event featuring talks, discussions, and tool demonstrations, including an interactive demo session. The workshop proceedings are published as CEUR-WS vol. 2187.

ISWC 2018 was a great venue to meet the community, make new connections, talk about current research challenges, share ideas and establish new collaborations. We look forward to the next ISWC conference.

Until then, meet us at SDA!

Paper accepted at the Journal of Web Semantics

We are very pleased to announce that our group got a paper accepted in the Journal of Web Semantics issue on Managing the Evolution and Preservation of the Data Web (MEPDaW).

The Journal of Web Semantics is an interdisciplinary journal covering research and applications in the various subject areas that contribute to the development of a knowledge-intensive and intelligent service Web. These areas include knowledge technologies, ontologies, agents, databases and the semantic grid; disciplines such as information retrieval, language technology, human-computer interaction and knowledge discovery are also of major relevance. All aspects of Semantic Web development are covered. The journal also encourages the publication of large-scale experiments and their analysis, to clearly illustrate scenarios and methods that introduce semantics into existing Web interfaces, contents and services. It emphasizes papers that combine theories, methods and experiments from different subject areas in order to deliver innovative semantic methods and applications.

Here is the pre-print of the accepted paper with its abstract:

- “TISCO: Temporal Scoping of Facts” by Anisa Rula, Matteo Palmonari, Simone Rubinacci, Axel-Cyrille Ngonga Ngomo, Jens Lehmann, Andrea Maurino and Diego Esteves

Abstract: Some facts in the Web of Data are only valid within a certain time interval. However, most of the knowledge bases available on the Web of Data do not provide temporal information explicitly. Hence, the relationship between facts and time intervals is often lost. A few solutions have been proposed in this field; most of them focus on extracting facts together with time intervals rather than on mapping existing facts to time intervals. This paper studies the problem of determining the temporal scopes of facts, that is, deciding the time intervals in which a fact is valid. We propose a generic approach which addresses this problem by curating temporal information of facts in the knowledge bases. Our proposed framework, Temporal Information Scoping (TISCO), exploits evidence collected from the Web of Data and the Web. The evidence is combined within a three-step approach which comprises matching, selection and merging. This is the first work employing matching methods that consider either a single fact or a group of facts at a time. We evaluate our approach against a corpus of facts as input and different parameter settings for the underlying algorithms. Our results suggest that we can detect temporal information for facts from DBpedia with an F-measure of up to 80%.

Acknowledgment

This research has been supported in part by the research grant number 17A209 from the University of Milano-Bicocca and by a scholarship from the University of Bonn.

Papers accepted at EMNLP 2018 / FEVER & W-NUT Workshops

We are very pleased to announce that our group got 3 workshop papers accepted for presentation at EMNLP 2018; the workshops will be held on 1 November 2018 in Brussels, Belgium.

FEVER: The First Workshop on Fact Extraction and Verification: With billions of individual pages on the web providing information on almost every conceivable topic, we should have the ability to collect facts that answer almost every conceivable question. However, only a small fraction of this information is contained in structured sources (Wikidata, Freebase, etc.) – we are therefore limited by our ability to transform free-form text to structured knowledge. There is, however, another problem that has become the focus of a lot of recent research and media coverage: false information coming from unreliable sources. In an effort to jointly address both problems, a workshop promoting research in joint Fact Extraction and VERification (FEVER) has been proposed.

W-NUT: The 4th Workshop on Noisy User-generated Text: focuses on Natural Language Processing applied to noisy user-generated text, such as that found in social media, online reviews, crowdsourced data, web forums, clinical records and language learner essays.

Here are the accepted papers with their abstracts:

- Belittling the Source: Trustworthiness Indicators to Obfuscate Fake News on the Web by Diego Esteves, Aniketh Janardhan Reddy, Piyush Chawla and Jens Lehmann.

Abstract: With the growth of the internet, the amount of fake news online has been increasing every year. The consequences of this phenomenon are manifold, ranging from poor decision-making to episodes of bullying and violence. Therefore, fact-checking algorithms have become a valuable asset. To this end, an important step in detecting fake news is having access to a credibility score for a given information source. However, most of the widely used Web indicators have either been shut down to the public (e.g., Google PageRank) or are not free to use (Alexa Rank). Furthermore, existing databases are short, manually curated lists of online sources, which do not scale. Finally, most of the research on the topic is theoretical or explores confidential data in a restricted simulation environment. In this paper, we explore current research, highlight the challenges and propose solutions to tackle the problem of classifying websites on a credibility scale. The proposed model automatically extracts source reputation cues and computes a credibility factor, providing valuable insights which can help in belittling dubious and confirming trustworthy unknown websites. Experimental results outperform the state of the art in the 2-class and 5-class settings.

Abstract: Named Entity Recognition (NER) is an important subtask of information extraction that seeks to locate and recognise named entities. Despite recent achievements, we still face limitations in correctly detecting and classifying entities, particularly in short and noisy text such as Twitter posts. An important drawback of most NER approaches is their high dependency on hand-crafted features and domain-specific knowledge, which are necessary to achieve state-of-the-art results. Thus, devising models to deal with such linguistically complex contexts is still challenging. In this paper, we propose a novel multi-level architecture that does not rely on any specific linguistic resource or encoded rule. Unlike traditional approaches, we use features extracted from images and text to classify named entities. Experimental tests against state-of-the-art NER for Twitter on the Ritter dataset present competitive results (0.59 F-measure), indicating that this approach may lead towards better NER models.

- DeFactoNLP: Fact Verification using Entity Recognition, TFIDF Vector Comparison and Decomposable Attention by Aniketh Janardhan Reddy, Gil Rocha and Diego Esteves.

Abstract: In this paper, we describe DeFactoNLP, the system we designed for the FEVER 2018 Shared Task. The aim of this task was to conceive a system that can not only automatically assess the veracity of a claim but also retrieve evidence supporting this assessment from Wikipedia. In our approach, the Wikipedia documents whose Term Frequency-Inverse Document Frequency (TFIDF) vectors are most similar to the vector of the claim and those documents whose names are similar to those of the named entities (NEs) mentioned in the claim are identified as the documents which might contain evidence. The sentences in these documents are then supplied to a textual entailment recognition module. This module calculates the probability of each sentence supporting the claim, contradicting the claim or not providing any relevant information to assess the veracity of the claim. Various features computed using these probabilities are finally used by a Random Forest classifier to determine the overall truthfulness of the claim. The sentences which support this classification are returned as evidence. Our approach achieved a 0.4277 evidence F1-score, a 0.5136 label accuracy and a 0.3833 FEVER score.
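The document-retrieval step in the DeFactoNLP abstract ranks Wikipedia documents by how similar their TF-IDF vectors are to the claim's vector. A minimal pure-Python sketch of that ranking is shown below, assuming whitespace tokenization, raw term frequencies, `log(N/df)` weighting and cosine similarity; the three toy documents are invented, and the name-matching, entailment and Random Forest stages of DeFactoNLP are not included. This is an illustration of TF-IDF retrieval in general, not the authors' code.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase and split on whitespace (a deliberately crude tokenizer)."""
    return text.lower().split()


def tfidf_vectors(docs):
    """Return one {term: tf * idf} dict per document, plus the idf table."""
    tokenized = [tokenize(d) for d in docs]
    n = len(tokenized)
    df = Counter(term for toks in tokenized for term in set(toks))
    idf = {term: math.log(n / count) for term, count in df.items()}
    vectors = [{t: c * idf[t] for t, c in Counter(toks).items()} for toks in tokenized]
    return vectors, idf


def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(w * b.get(t, 0.0) for t, w in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_documents(claim, docs, k=2):
    """Indices of the k documents whose TF-IDF vectors are closest to the claim."""
    vectors, idf = tfidf_vectors(docs)
    # Claim terms that never occur in the collection get weight 0.
    claim_vec = {t: c * idf.get(t, 0.0) for t, c in Counter(tokenize(claim)).items()}
    scores = sorted(((cosine(claim_vec, v), i) for i, v in enumerate(vectors)), reverse=True)
    return [i for _, i in scores[:k]]


# Invented toy collection standing in for Wikipedia pages.
DOCS = [
    "paris is the capital of france",
    "berlin is the capital of germany",
    "the eiffel tower is in paris",
]
print(top_documents("The capital of France is Paris", DOCS))  # [0, 1]
```

Terms that appear in every document (here "is" and "the") get an idf of zero and therefore do not influence the ranking, which is the main point of the IDF factor.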

Acknowledgment

This research was partially supported by an EU H2020 grant provided for the WDAqua project (GA no. 642795) and by the DAAD under the “International promovieren in Deutschland für alle” (IPID4all) project.

Looking forward to seeing you at EMNLP/FEVER 2018.

Papers accepted at EKAW 2018

We are very pleased to announce that our group got 2 papers accepted for presentation at the 21st International Conference on Knowledge Engineering and Knowledge Management (EKAW 2018), which will be held on 12–16 November 2018 in Nancy, France.

The 21st International Conference on Knowledge Engineering and Knowledge Management is concerned with all aspects of eliciting, acquiring, modeling and managing knowledge, and with the construction of knowledge-intensive systems and services for the semantic web, knowledge management, e-business, natural language processing, intelligent information integration, and related areas. The special theme of EKAW 2018 is “Knowledge and AI”: the conference called for papers describing algorithms, tools, methodologies and applications that exploit the interplay between knowledge and Artificial Intelligence techniques, with a special emphasis on knowledge discovery. Accordingly, EKAW 2018 highlights the importance of Knowledge Engineering and Knowledge Management both with the help of AI and for AI.

Here is the list of accepted papers with their abstracts:

- “Divided we stand out! Forging Cohorts fOr Numeric Outlier Detection in large scale knowledge graphs (CONOD)” by Hajira Jabeen, Rajjat Dadwal, Gezim Sejdiu, and Jens Lehmann.

Abstract: With the recent advances in data integration and the concept of data lakes, massive pools of heterogeneous data are being curated as Knowledge Graphs (KGs). In addition to data collection, it is of utmost importance to gain meaningful insights from this composite data. However, given the graph-like representation, the multimodal nature and the large size of the data, most traditional analytic approaches are no longer directly applicable. Traditional approaches could collect all values of a particular attribute, e.g. height, and try to perform anomaly detection for this attribute. However, it is conceptually inaccurate to compare one attribute representing different entities, e.g. the height of buildings against the height of animals. Therefore, there is a strong need to develop fundamentally new approaches for outlier detection in KGs. In this paper, we present a scalable approach, dubbed CONOD, that can deal with multimodal data and performs adaptive outlier detection against the cohorts of classes they represent, where a cohort is a set of classes that are similar based on a set of selected properties. We have tested the scalability of CONOD on KGs of different sizes, assessed the outliers using different inspection methods and achieved promising results.

Abstract: Although the use of apps and online services comes with accompanying privacy policies, a majority of end users ignore them due to their length, complexity and unappealing presentation. In light of the now enforced EU-wide General Data Protection Regulation (GDPR), we present an automatic technique for mapping privacy policy excerpts to relevant GDPR articles, so as to support average users in understanding their usage risks and their rights as data subjects. KnIGHT (Know your rIGHTs) is a tool that finds candidate sentences in a privacy policy that are potentially related to specific articles in the GDPR. The approach employs semantic text matching in order to find the most appropriate GDPR paragraph and, to the best of our knowledge, is one of the first automatic attempts of its kind applied to a company’s policy. Our evaluation shows that on average between 70-90% of the tool’s automatic mappings are at least partially correct, meaning that the tool can be used to significantly guide human comprehension. Following this result, in the future we will utilize domain-specific vocabularies to perform a deeper semantic analysis and improve the results further.
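The cohort idea in the CONOD abstract (compare a value only against entities of similar classes, rather than against every entity that shares the attribute) can be illustrated with a small sketch. Here the class-to-cohort mapping `COHORT_OF` is hard-coded and outliers are flagged with the common interquartile-range (Tukey fence) rule; both are assumptions made for illustration, since CONOD derives its cohorts from class similarity over selected properties and is designed to run at scale. The toy entities and heights are invented.

```python
from collections import defaultdict
from statistics import quantiles

# Invented toy facts: (entity, class, height in metres).
FACTS = [
    ("b1", "Skyscraper", 300.0), ("b2", "Skyscraper", 320.0),
    ("b3", "Tower", 310.0), ("b4", "Tower", 290.0),
    ("b5", "Skyscraper", 305.0), ("b6", "Tower", 5000.0),
    ("a1", "Dog", 1.5), ("a2", "Horse", 1.8), ("a3", "Horse", 2.0),
    ("a4", "Dog", 1.7), ("a5", "Horse", 1.6),
]

# Hypothetical, hand-written cohort assignment (CONOD computes cohorts instead).
COHORT_OF = {"Skyscraper": "structures", "Tower": "structures",
             "Dog": "animals", "Horse": "animals"}


def cohort_outliers(facts, cohort_of, k=1.5, min_size=4):
    """Flag entities whose value lies outside the IQR fences of their own cohort."""
    groups = defaultdict(list)
    for entity, cls, value in facts:
        groups[cohort_of[cls]].append((entity, value))
    outliers = []
    for members in groups.values():
        values = [v for _, v in members]
        if len(values) < min_size:
            continue  # too few values for meaningful quartiles
        q1, _, q3 = quantiles(values, n=4)  # quartile cut points of this cohort only
        iqr = q3 - q1
        low, high = q1 - k * iqr, q3 + k * iqr
        outliers.extend(e for e, v in members if v < low or v > high)
    return sorted(outliers)


print(cohort_outliers(FACTS, COHORT_OF))  # ['b6']
```

With these values only `b6` (a 5,000 m "Tower") falls outside its cohort's fences, while the animal heights are judged only against one another and are never compared with building heights.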

Acknowledgment

This work was partly supported by the EU Horizon 2020 projects WDAqua (GA no. 642795), Boost4.0 (GA no. 780732) and BigDataOcean (GA no. 732310), and by DAAD.

Looking forward to seeing you at EKAW 2018.

Paper accepted at CoNLL 2018

We are very pleased to announce that our group got one paper accepted for presentation at the SIGNLL Conference on Computational Natural Language Learning (CoNLL 2018). CoNLL is a top-tier conference organized yearly by SIGNLL (ACL’s Special Interest Group on Natural Language Learning). This year, CoNLL will be co-located with EMNLP 2018 and will be held on October 31 – November 1, 2018 in Brussels, Belgium.

The aim of the CoNLL conference is to bring together researchers and practitioners from academia and industry working in the areas of deep learning, natural language processing and learning. It is among the top-10 natural language processing and computational linguistics conferences.

Here is the accepted paper with its abstract:

- “Improving Response Selection in Multi-turn Dialogue Systems by Incorporating Domain Knowledge” by Debanjan Chaudhuri, Agustinus Kristiadi, Jens Lehmann and Asja Fischer.

Abstract: Building systems that can communicate with humans is a core problem in Artificial Intelligence. This work proposes a novel neural network architecture for response selection in an end-to-end multi-turn conversational dialogue setting. The architecture applies context-level attention and incorporates additional external knowledge provided by descriptions of domain-specific words. It uses a bi-directional Gated Recurrent Unit (GRU) for encoding context and responses, and learns to attend over the context words given the latent response representation and vice versa. In addition, it incorporates external domain-specific information using another GRU for encoding the domain keyword descriptions. This allows a better representation of domain-specific keywords in responses and hence improves the overall performance. Experimental results show that our model outperforms all other state-of-the-art methods for response selection in multi-turn conversations.

Acknowledgement

This research was supported by the KDDS project at Fraunhofer.

Looking forward to seeing you at CoNLL 2018.

AskNow 0.1 Released

Dear all,

the Smart Data Analytics group is happy to announce AskNow 0.1 – the initial release of Question Answering Components and Tools over RDF Knowledge Graphs.

Website: http://asknow.sda.tech/

Demo: http://asknowdemo.sda.tech

GitHub: https://github.com/AskNowQA

The following components with corresponding features are currently supported by AskNow:

- EARL 0.1: EARL performs entity linking and relation linking as a joint task. It uses machine learning to exploit the connection density between nodes in the knowledge graph, relying on three base features and re-ranking steps to predict entities and relations.

ISWC 2018: https://arxiv.org/pdf/1801.03825.pdf

Demo: https://earldemo.sda.tech/

Github: https://github.com/AskNowQA/EARL

- SQG 0.1: SQG is a SPARQL Query Generator with a modular architecture. It enables easy integration with other components for the construction of a fully functional QA pipeline. Currently, entity-relation, compound, count and boolean questions are supported.

ESWC 2018: http://jens-lehmann.org/files/2018/eswc_qa_query_generation.pdf

Github: https://github.com/AskNowQA/SQG

- AskNow UI 0.1: The UI serves as a platform for users to pose their questions to the AskNow QA system. It displays each answer according to its type: an entity, a list of entities, a boolean or a literal. For entities, it shows the abstracts from DBpedia.

Github: https://github.com/AskNowQA/AskNowUI

- SemanticParsingQA 0.1: The Semantic Parsing-based Question Answering system is built on the integration of EARL, SQG and AskNowUI.

Demo: http://asknowdemo.sda.tech/

Github: https://github.com/AskNowQA/SemanticParsingQA
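To make the SQG description above more concrete, here is a deliberately simple sketch of how an already-linked entity IRI and relation IRI (the kind of output EARL produces) could be turned into SPARQL for list, count and boolean questions. It uses fixed single-triple templates and is not SQG's actual query-generation method; the function `build_query` and the DBpedia IRIs in the example are chosen only for illustration.

```python
def build_query(question_type, entity, relation, target=None):
    """Build a SPARQL string from linked IRIs using fixed single-triple templates.

    Hypothetical helper for illustration; SQG itself handles more complex
    (e.g. compound) questions.
    """
    if question_type == "boolean":
        # Boolean questions check whether one specific triple holds.
        if target is None:
            raise ValueError("boolean questions need a target IRI")
        return f"ASK WHERE {{ <{entity}> <{relation}> <{target}> }}"
    pattern = f"<{entity}> <{relation}> ?answer ."
    if question_type == "list":
        return f"SELECT DISTINCT ?answer WHERE {{ {pattern} }}"
    if question_type == "count":
        return f"SELECT (COUNT(DISTINCT ?answer) AS ?count) WHERE {{ {pattern} }}"
    raise ValueError(f"unsupported question type: {question_type}")


BERLIN = "http://dbpedia.org/resource/Berlin"
COUNTRY = "http://dbpedia.org/ontology/country"
GERMANY = "http://dbpedia.org/resource/Germany"

print(build_query("list", BERLIN, COUNTRY))             # "Which country is Berlin in?"
print(build_query("boolean", BERLIN, COUNTRY, GERMANY))  # "Is Berlin in Germany?"
```

Keeping query construction separate from entity and relation linking is what allows components like these to be swapped independently in a QA pipeline.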

We want to thank everyone who helped to create this release, in particular the HOBBIT, SOLIDE, WDAqua and BigDataEurope projects.

View this announcement on Twitter: https://twitter.com/AskNowQA/status/1040205350853599233

Kind regards,

The AskNow Development Team

(http://asknow.sda.tech/people/)