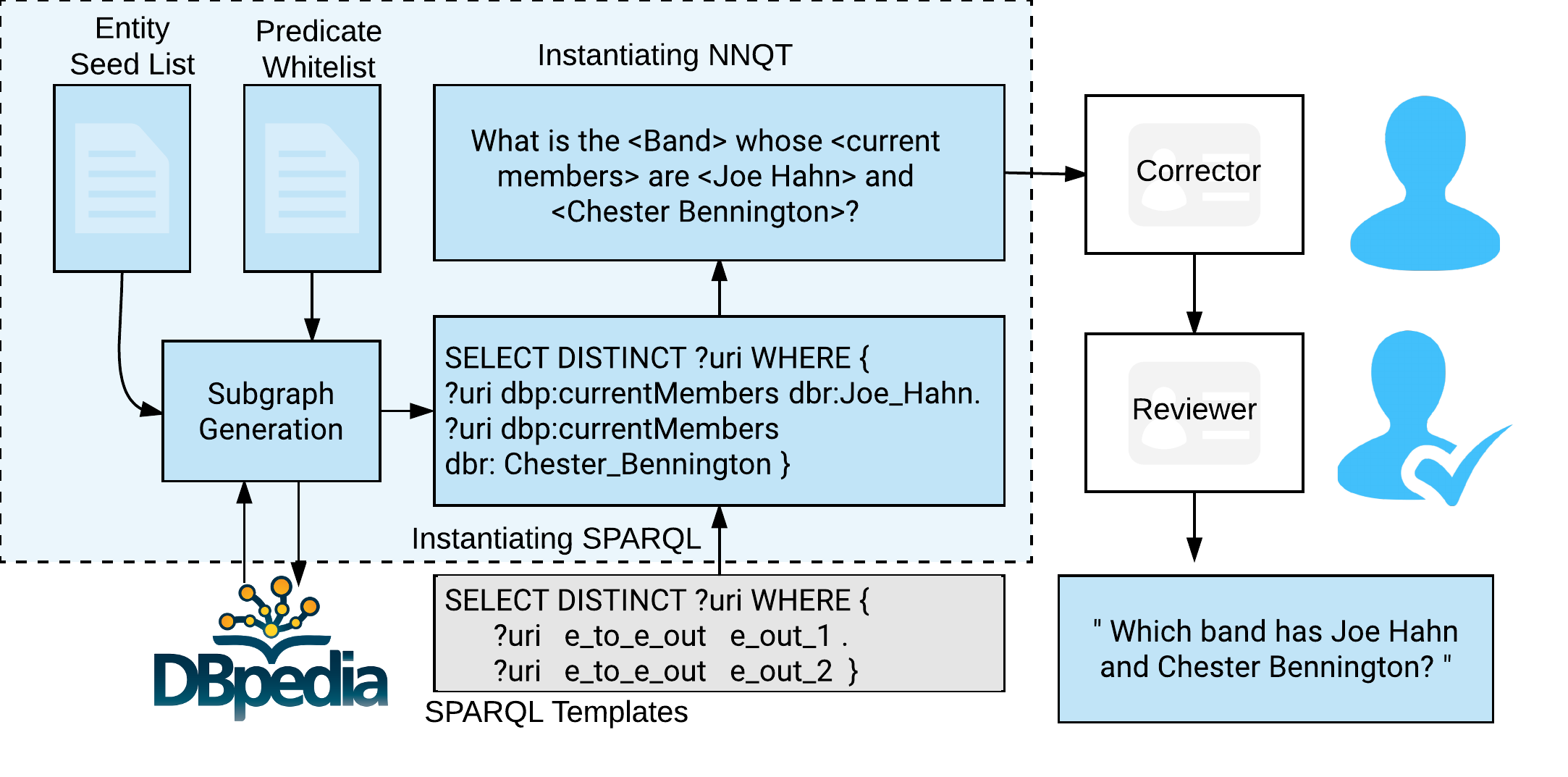

This project aims to create a QA dataset that pairs natural language (NL) questions with their corresponding SPARQL queries. The goal is a high-quality dataset for training QA systems, at a scale large enough to also benefit neural network based QA systems. We frame question generation as a transduction problem: the subgraph generated around a seed entity is fitted into a set of SPARQL templates, which are then converted into a normalized natural question template (NNQT). This generic structure is then transformed by English speakers into NL questions with lexical and syntactic variations. These NL questions were then reviewed by domain experts to ensure the quality of the dataset.

Dataset Generation Workflow

Using a list of seed entities and filtering by a predicate whitelist, we generate subgraphs of DBpedia and use them to instantiate SPARQL templates, thereby generating valid SPARQL queries. These SPARQL queries are then used to instantiate NNQTs and generate questions (which are often grammatically incorrect). These questions are manually corrected and paraphrased, then reviewed and optionally edited by a reviewer.
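
The workflow above can be sketched roughly as follows. This is a minimal illustration, not the project's actual generation code: the placeholder syntax, the single template pair, the whitelist contents, the example triples, and the helper names `label` and `generate` are all assumptions made for the example.

```python
from string import Template

# Hypothetical whitelist; the real project uses its own predicate list.
PREDICATE_WHITELIST = {"http://dbpedia.org/ontology/author"}

# Hypothetical SPARQL template and matching question template.
# The $p / $e placeholder syntax is an assumption for this sketch.
SPARQL_TEMPLATE = Template("SELECT DISTINCT ?uri WHERE { ?uri <$p> <$e> }")
QUESTION_TEMPLATE = Template("What are the things whose $p_label is $e_label?")


def label(uri):
    """Derive a rough human-readable label from the last URI segment."""
    return uri.rstrip("/").rsplit("/", 1)[-1].replace("_", " ")


def generate(triples, seed_entity):
    """Fill both templates from whitelisted triples whose object is the seed."""
    pairs = []
    for _subject, predicate, obj in triples:
        if obj != seed_entity or predicate not in PREDICATE_WHITELIST:
            continue  # outside the seed's subgraph, or predicate filtered out
        pairs.append({
            "sparql_query": SPARQL_TEMPLATE.substitute(p=predicate, e=obj),
            "verbalized_question": QUESTION_TEMPLATE.substitute(
                p_label=label(predicate), e_label=label(obj)
            ),
        })
    return pairs


# Illustrative triples only; not extracted from a DBpedia dump.
seed = "http://dbpedia.org/resource/J._R._R._Tolkien"
triples = [
    ("http://dbpedia.org/resource/The_Hobbit",
     "http://dbpedia.org/ontology/author", seed),
    ("http://dbpedia.org/resource/The_Hobbit",
     "http://dbpedia.org/ontology/wikiPageID", seed),  # dropped by whitelist
]
pairs = generate(triples, seed)
```

The automatically verbalized question produced this way is deliberately stilted; it corresponds to the stage that is later corrected and paraphrased by hand.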

JSON Structure

Each entry in the generated dataset has the following JSON structure:

    {
      "template_id": "Every unique SPARQL template has a different ID.",
      "sparql_template": "A query where resources in the triple pattern are replaced with placeholders.",
      "sparql_query": "Valid SPARQL query generated by using the triples in subgraphs to fill the placeholder resources in SPARQL templates.",
      "verbalized_question": "The automatically verbalized equivalent of the SPARQL query.",
      "corrected_question": "Human-corrected version of the verbalized question.",
      "_id": "Unique ID generated for every data node."
    }
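
As a rough sketch of how these fields might be consumed, the snippet below extracts (question, query) training pairs from a JSON string. It assumes the data is a JSON array of objects with the fields listed above; the function names and the sample record are hypothetical and only illustrate the shape of the data.

```python
import json

# Field names taken from the structure described above.
EXPECTED_FIELDS = {
    "_id", "template_id", "sparql_template",
    "sparql_query", "verbalized_question", "corrected_question",
}


def missing_fields(record):
    """Return the expected field names that are absent from a record."""
    return sorted(EXPECTED_FIELDS - set(record))


def load_pairs(json_text):
    """Parse a JSON array of records into (question, SPARQL query) pairs.

    Records lacking any expected field are skipped.
    """
    records = json.loads(json_text)
    return [
        (r["corrected_question"], r["sparql_query"])
        for r in records
        if not missing_fields(r)
    ]


# Placeholder record for illustration; values are not from the dataset.
sample = json.dumps([{
    "_id": "example-1",
    "template_id": 1,
    "sparql_template": "SELECT DISTINCT ?uri WHERE { ?uri <p> <e> }",
    "sparql_query": "SELECT DISTINCT ?uri WHERE { ?uri <http://dbpedia.org/ontology/author> <http://dbpedia.org/resource/J._R._R._Tolkien> }",
    "verbalized_question": "What are the things whose author is J. R. R. Tolkien?",
    "corrected_question": "Which works were written by J. R. R. Tolkien?",
}])
example_pairs = load_pairs(sample)
```

Using `corrected_question` rather than `verbalized_question` as the model input is a design choice for this sketch, since the corrected form is the human-reviewed one.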

Publications

A Corpus for Complex Question Answering over Knowledge Graphs